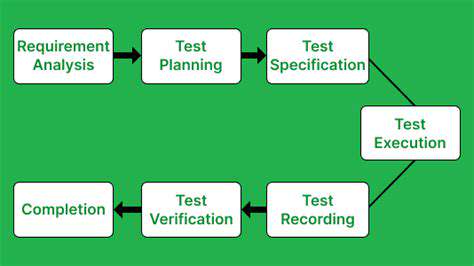

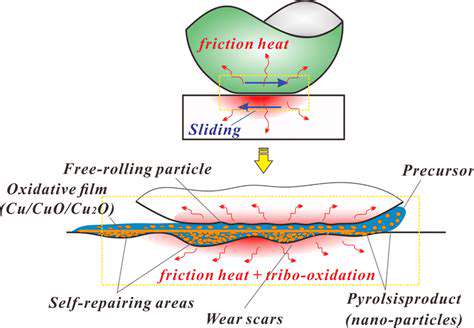

Rubber seal conditioner: Preventing cracks

Common causes of cracks in rubber seals

Optimizing performance: Choosing the right conditioner

Choosing the right hardware

Selecting the right hardware components is essential for optimizing

Far beyond the basics: Implementing a comprehensive maintenance policy

Far beyond the fundamentals: Improve your approach

Implementation of a r

Read more about Rubber seal conditioner: Preventing cracks

Extend battery life and improve performance. Discover the essential practices for maintaining the health of your hybrid car's battery. Regular maintenance checks, including understanding the battery components and monitoring their performance, can significantly extend battery life. Learn why periodic inspections matter for identifying potential problems early and avoiding expensive repairs. Understand the value of optimal charging habits and the impact of environmental conditions on battery efficiency. Explore best practices for keeping your hybrid battery clean and protected from moisture, as well as the benefits of using regenerative braking technology. Stay alert to your hybrid vehicle's dashboard warnings so you can catch performance problems quickly. By taking a proactive approach to caring for your hybrid battery, you can improve driving efficiency and save money in the long run. Read on to learn more about the best practices and advanced techniques that help keep your hybrid battery working at its best.

Mar 13, 2025

The influence of synthetic lubricating oils on transmission efficiency

May 03, 2025

The role of thermal management in high-performance vehicles

May 04, 2025

Why regular inspection of exhaust systems is essential

May 06, 2025

Evaluating the effectiveness of aftermarket suspension kits

May 12, 2025

Tips for restoring the clarity of cloudy or cracked headlights

May 12, 2025

Advanced faults of the powertrain control module

May 16, 2025

Advanced techniques for preventing internal corrosion in engines

May 17, 2025

Comprehensive maintenance schedules for high-mileage vehicles

May 21, 2025

Remote start installation: Convenience in any weather

Jun 11, 2025

Custom engine bay styling: Show-car ready

Jul 07, 2025

Hydrogen fuel cell cars: The alternative

Jul 13, 2025